Right now, AutoGPT and BabyAGI don't really work. If you give them a complex task, they go off the rails and get stuck in loops, so most people prefer to use the models directly rather than through an agent scaffold.

Will there be advancements in scaffolding this year, or new models such as GPT-5, that solve these problems and allow these agents to mostly work? I will try the agents myself and factor in whether it is common for people to use LLM agent frameworks for productivity assistant tasks.

@traders Models like o1 and Gemini 2.0 Flash Thinking seem to have really unlocked the ability I was referring to: improving their performance through reasoning and self-reflection without going off the rails and getting stuck in weird loops like AutoGPT did. As always, the Bitter Lesson was correct. Instead of writing a human-created prompt for each scenario like AutoGPT, the solution was to use RL to get the AI to learn to self-prompt and not get stuck. The lack of visibility into o1's chain of thought makes it tricky to see how much AutoGPT-style self-prompting and self-reflection it's doing, but the success of these models is still a strong argument to resolve Yes.

Autonomous coding agents seem to perform much better than a single-pass LLM on coding benchmarks, but I'm not sure how many iterations of self-reflection they do, and I notice that self-reflective programming tools don't seem to be very popular in real use. On research, writing, and other general tasks, it's less clear to me whether these tools do better than a standard LLM. I'm not a ChatGPT Plus subscriber, so I haven't used o1 myself, and I'm curious what other people's experiences are using o1 on research or writing tasks. I'm currently trying out Gemini 2.0 Flash Thinking and o1-mini-powered Perplexity, and my first impressions are positive. Still, the lack of widespread adoption of these reasoning models or self-reflection tools outside of coding is an argument to resolve No, though maybe people just haven't caught on yet.

I'm currently leaning towards resolving this question at 50% or 75%, but I'm open to arguments.

@ahalekelly o1 is an improvement, although I wouldn't consider it an AutoGPT-style agent.

To be an AutoGPT-style agent, it needs to be able to run mostly autonomously for an extended period of time. o1 does not do this: it thinks for however long it decides to and then provides a single output, like a model rather than an agent. You can, however, place o1 in a loop like AutoGPT. It works better than older models, but it still loses track of the task and can't really evaluate its own work.

Ignoring o1 for a second, I would consider Devin to be the state-of-the-art AutoGPT-style agent. The team I'm on has not found Devin to be useful: it can do simple tasks, but it can't really do much more than the base models it's using.

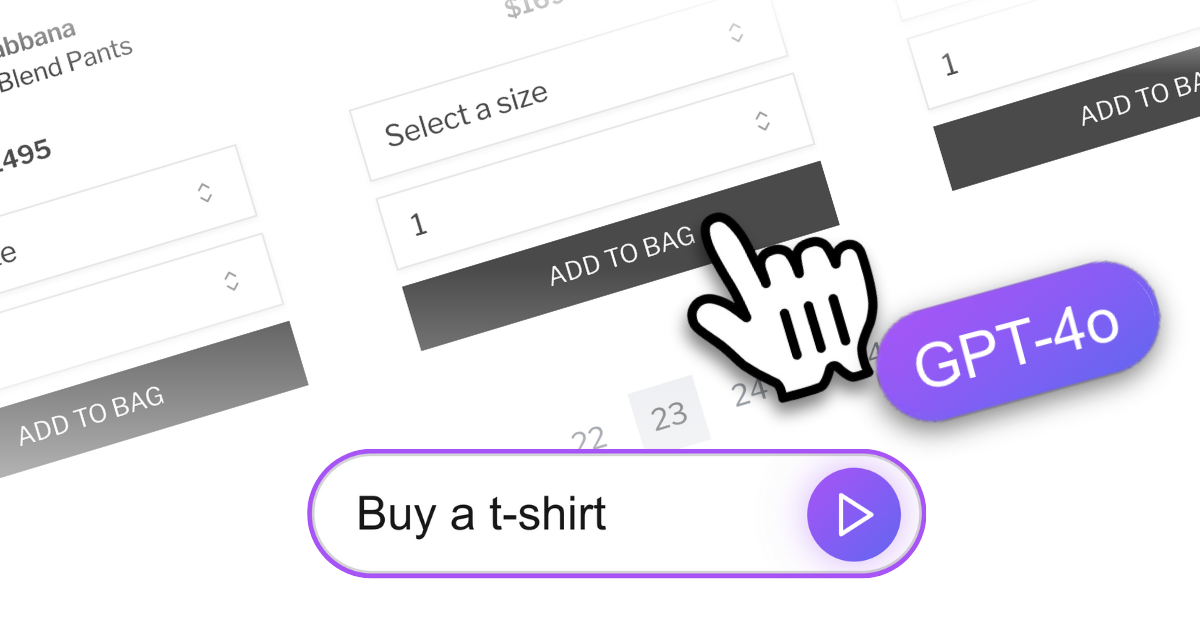

https://theaidigest.org/agent is a demo of an AI agent you can give tasks to, right inside a webpage. It's useful for getting a sense of current capabilities!

Devin and SWE-agent are apparently doing better than RAG on coding tasks. I haven't looked at how they work yet, but they made me update higher on this question.

The type of task I had in mind when writing this was something like "write me a summary of the current state of this industry or field of study." The agent would figure out the best workflow, do web searches, read pages, do more web searches based on what it found, and ultimately complete the task with a better result than a single-pass web search and summarization.

Another way of framing the criteria is: "Will AI agents be worth using instead of using the LLM directly for a significant portion of tasks?"

@RemNi My whole and ongoing experience with agent frameworks is that they simply go nuts, and spending the same amount of time actually coding a system is far easier.

I assume so, but just to make sure: does this resolve YES if new, better agent frameworks get developed on top of LLMs (as opposed to AutoGPT and BabyAGI being improved)?

Also, now that I'm really thinking about this, how do you intend to resolve the question?

@inaimathi Yes, new agent frameworks that operate similarly will count.

I'm not sure I understand what you mean by how I intend to resolve it. It's fundamentally a subjective assessment of the usefulness and reliability of the agents, but let me know if you have specific questions about what would count.

@ahalekelly Yeah; that's what I was getting at (I wanted to know if you had specific metrics/test processes in mind or if it was going to be a subjective resolution).