Introduction

Connections is a unique, playful semantic game that changes each day. It occupies a fairly different space from most of the other games that Large Language Models are effectively challenging on Manifold and elsewhere, being at times both humorous and abstract to varying degrees. But it does rely entirely on a simple structure of English text, and it features only sixteen terms at a time, with up to 3 failed guesses forgiven per day. If you're unfamiliar, play it for a few days!

I think Connections would make a good mini-benchmark of how much progress LLMs make in 2024. So, if a prompt and LLM combo is discovered and posted in this market, and folks are able to reproduce its success, I will resolve this Yes and it'll be a tiny blip on our AI timelines. I will need some obvious leeway for edge cases and clarifications as things progress, to prevent a dumb oversight from ruining the market. I will not be submitting to this market, but will bet since the resolution should be independently verifiable.

Standards

- The prompt must obey the fixed/general prompt rules from Mira's Sudoku market, excepting those parts that refer specifically to Sudoku and GPT-4.

- The information from a day's Connections puzzle may be fed all at once in any format to the LLM, and the pass/fail of each guess generated may be fed as a yes/no/one away as long as no other information is provided.

- The prompt must succeed on at least 16 out of 20 randomly selected Connections puzzles from the archive available here, or the best available archive at the time it is submitted.

- Successful replication must then occur across three more samples of 20 puzzles in a row, all of which start with a fresh instance and at least one of which is entered by a different human. This is both to verify the success, and to prevent a brute force fluke from fully automated models.

- Since unlike the Sudoku market this is not limited to GPT-4, any prompt of any format for any LLM that is released before the end of 2024 is legal, so long as it doesn't try to sneak in the solution or otherwise undermine the spirit of the market.
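The pass/fail arithmetic in the standards above can be sketched roughly as follows. This is only an illustration, not the creator's actual procedure: the `Sample` type, the `operator` label, and the assumption that each replication sample must also reach 16 out of 20 are all hypothetical readings of the rules.

```python
from dataclasses import dataclass

PASS_THRESHOLD = 16  # from the standards: at least 16 out of 20
SAMPLE_SIZE = 20     # puzzles per sample
REPLICATIONS = 3     # "three more samples of 20 puzzles in a row"


@dataclass
class Sample:
    solved: int    # puzzles solved out of SAMPLE_SIZE
    operator: str  # hypothetical label for the human who entered the sample


def sample_passes(sample: Sample) -> bool:
    # Assumption: replication samples use the same 16/20 bar as the first one.
    return sample.solved >= PASS_THRESHOLD


def meets_standards(initial: Sample, replications: list[Sample]) -> bool:
    """Initial success, then three passing samples in a row,
    at least one of them entered by a different human."""
    if not sample_passes(initial):
        return False
    if len(replications) < REPLICATIONS:
        return False
    # "In a row" is read here as the first three samples after the initial one.
    first_three = replications[:REPLICATIONS]
    if not all(sample_passes(s) for s in first_three):
        return False
    return any(s.operator != initial.operator for s in first_three)
```

Under this reading, a single failed replication sample breaks the streak, and three passing samples all entered by the original submitter would still not be enough.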

Update 2024-12-12 (PST): - The LLM only needs to correctly group the 16 words into their respective groups of 4. It does not need to identify or name the category labels for each group. (AI summary of creator comment)
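One way to check a submission under this clarification is to compare groupings as unordered sets, ignoring category labels, group order, and word order. The sketch below is a minimal illustration with hypothetical helper names (`normalize`, `same_grouping`); the case and whitespace normalization is an assumption, not something the market specifies.

```python
def normalize(groups):
    """Turn a list of 4 word lists into a set of frozensets (order-free)."""
    return {frozenset(w.strip().upper() for w in group) for group in groups}


def same_grouping(proposed, solution) -> bool:
    """True if both partitions contain exactly the same groups of words."""
    return normalize(proposed) == normalize(solution)


# Example using the first sample puzzle quoted in the Claude prompt in this thread.
solution = [
    ["DART", "HEM", "PLEAT", "SEAM"],
    ["CAN", "CURE", "DRY", "FREEZE"],
    ["BITE", "EDGE", "PUNCH", "SPICE"],
    ["CONDO", "HAW", "HERO", "LOO"],
]
proposed = [
    ["loo", "hero", "haw", "condo"],
    ["spice", "punch", "edge", "bite"],
    ["seam", "pleat", "hem", "dart"],
    ["freeze", "dry", "cure", "can"],
]
print(same_grouping(proposed, solution))  # True
```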

Update 2025-01-01 (PST) (AI summary of creator comment):

- Independent verification: Success must be confirmed by multiple traders using separate instances of the LLM.

- Consistent prompt usage: The same prompt must be utilized across different users to achieve successful puzzle solving.

- Resolution timeline extension: Resolution may be delayed until the end of January to accommodate verification processes.

o1 hasn't failed at any of the ~dozen I've tried over the past weeks (a handful of times it's made a reasonable enough first guess and then needs a second).

No particularly special prompting - literally just 'solve this NYT connections', and then if it fails, '[A, B, C, D] was correct. [W, X, Y, Z] was incorrect.'

https://mikehearn.notion.site/155c9175d23480bf9720cba20980f539?v=77fbc74b44bf4ccf9172cabe2b4db7b8 has it getting o1 pro 14/15 on the first attempt [and o1 got it on the second attempt for the one it missed when I tried, for 15/15]. He's "stopped tracking these because the results ended up pretty clear. o1 Pro is nearly perfect".

The archive above got taken down, but to get to 16, I just tested today's, which o1 got. This is obviously playing a bit fast and loose with 'random' (and the assumption that o1 pro >> o1; although I could quickly feed o1 the couple that it failed to ensure it does get them within the subsequent allowed tries if people doubt this), but at this point I'm 99%+ confident that o1 (let alone o1 pro/o1 high) would meet the bar for this market.

If push comes to shove I'm happy to feed 16-20 randomly selected Connections puzzles into o1 if someone's an NYT pro subscriber or whatever and can get their hands on them. But I'm curious whether any No holders are holding out because they think there's a chance that o1 actually can't meet this?

@CalebW As a matter of principle, if you can hand off this combination to a second party to confirm it, I will resolve Yes. I personally haven't used o1 and haven't held in this market in some time.

@Panfilo This is not limited to Caleb, by the way. Any @traders can do this, as long as they can verify that they and a separate instance run by a separate person can both get a net success with the same prompt using an LLM released in 2024 (or earlier). I see multiple people confidently using o1, but for the resolution criteria to be met, we do need that redundant success with the same prompt. Just a technicality, but an important one. I am willing to hold off resolution for all of January in case folks are busy, but the market will remain closed for consistency.

@Panfilo I just tried o1 with Connections (1/1/25 edition) and was impressed to see it perfectly solve the puzzle. But my prompt was a little weird. Could we maybe create a template for a prompt and then all try it on the same day (starting on 1/2/25 for example) and share our findings? Want to propose a simple template?

@Panfilo The question isn't whether "an LLM released in 2024 (or earlier)" can do this. It's whether a "prompt" can do this by the end of 2024.

Verification should only be happening using prompts known to have been written before, well, today — not LLMs released before today.

@jpoet If you need a standardized prompt that was written before January 1, here's one of my old chats: https://chatgpt.com/c/673fd241-f174-8010-830b-9a98eaafea80

```
Sort these words into 4 categories with 4 words each.

PLAY BAY STIR CHAIN TREE STREAM BARK RUN HOWL GARNISH AIR PYRAMID MUDDLE LADDER SNARL STRAIN
```

Here's a screenshot that proves that the chat was on November 21:

@jpoet Yes, the original description is still the standard, I was just prompting (lol) folks to actually show their work.

@Panfilo sorry if this is answered somewhere else but tbc, this market is about whether the AI can place the 16 words in the right groups of 4, not whether they can guess the name of the categories on top of that?

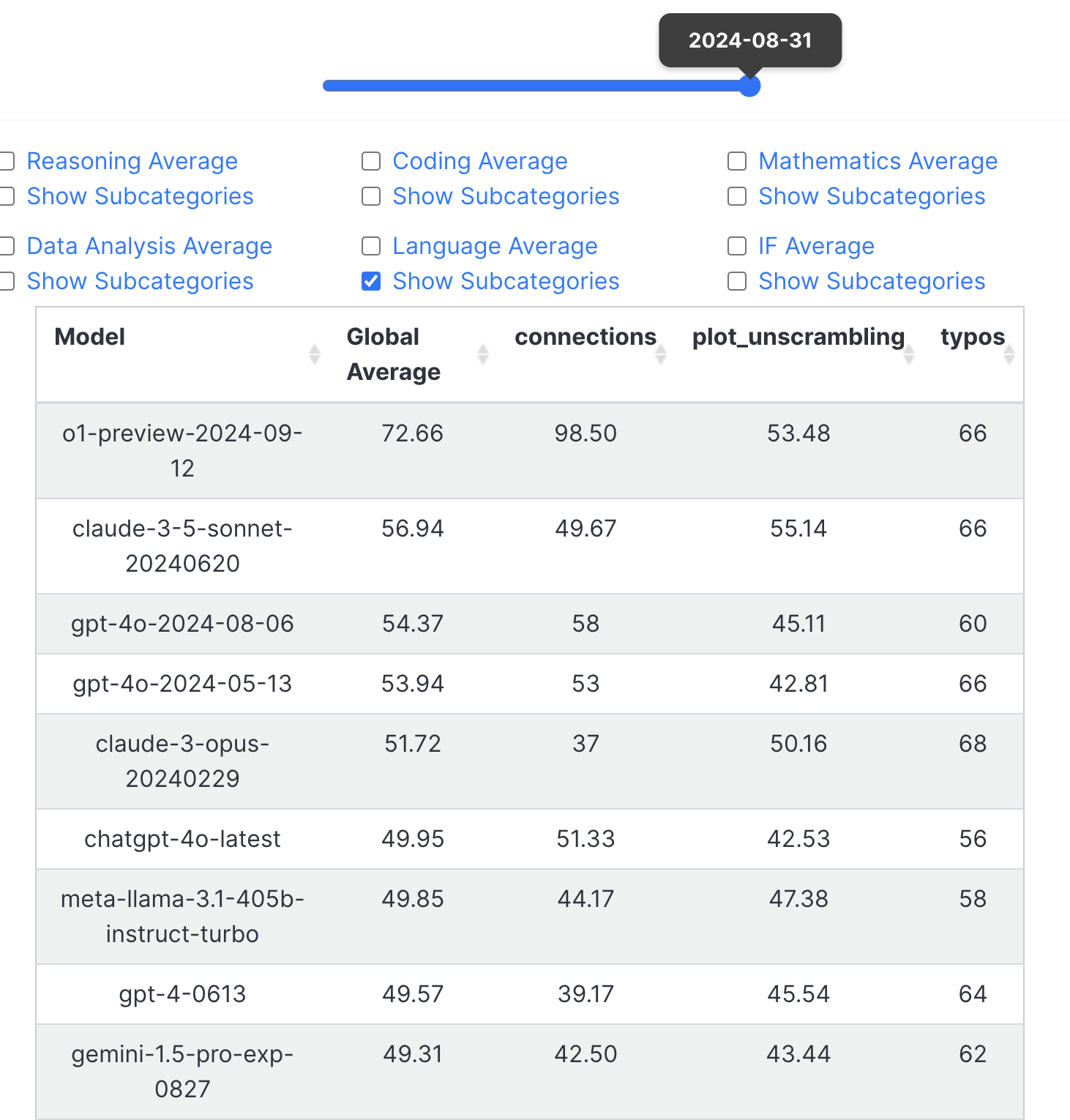

@Bayesian You think it trained on the LiveBench ones? Maybe people could test the last few Connections games to see

@EliLifland Looks like they're from April-May https://huggingface.co/datasets/livebench/language/viewer/default/test?q=connections

@Bayesian I tried it on today's game which it couldn't have been trained on and it got it using the simplest prompt possible (second message https://chatgpt.com/share/66e47309-63ec-8007-85af-324a7a9b3310 )

@EliLifland I am bullish on the market overall but this particular model would have a hard time passing the test as written, since randomly selecting one of the puzzles from April-May would count as (unintentionally) backdooring in solutions.

The new o1 models would count here, right?

https://openai.com/index/learning-to-reason-with-llms/

@Bayesian Cool. In my test it oneshots 4/5 from the archive given an example solution, so if it gets 3 attempts, some more context and a bit of prompt massaging, I think it will easily do this.

Claude 3.5 Sonnet with this system prompt:

You are a world-class AI system, capable of complex reasoning and reflection. Reason through the query inside <thinking> tags, and then provide your final response inside <output> tags. If you detect that you made a mistake in your reasoning at any point, correct yourself inside <reflection> tags.

And this prompt:

Solve today’s NYT Connections game. Here are the instructions for how to play this game: Find groups of four items that share something in common. Category Examples: FISH: Bass, Flounder, Salmon, Trout FIRE _: Ant, Drill, Island, Opal Categories will always be more specific than ’5-LETTER-WORDS’, ‘NAMES’, or ’VERBS.’ Example 1: *Words: [’DART’, ‘HEM’, ‘PLEAT’, ‘SEAM’, ‘CAN’, ‘CURE’, ‘DRY’, ‘FREEZE’, ‘BITE’, ‘EDGE’, ‘PUNCH’, ‘SPICE’, ‘CONDO’, ‘HAW’, ‘HERO’, ‘LOO’] Groupings: 1. 1. Things to sew: [‘DART’, ‘HEM’, ‘PLEAT’, ‘SEAM’] 2. 2. Ways to preserve food: [CAN’, ‘CURE’, ‘DRY’, ‘FREEZE’] 3. 3. Sharp quality: [’BITE’, ‘EDGE’, ‘PUNCH’, ‘SPICE’] 4. 4. Birds minus last letter: [’CONDO’, ‘HAW’, ‘HERO’, ‘LOO’] Example 2: Words: [’COLLECTIVE’, ‘COMMON’, ‘JOINT’, ‘MUTUAL’, ‘CLEAR’, ‘DRAIN’, ‘EMPTY’, ‘FLUSH’, ‘CIGARETTE’, ‘PENCIL’, ‘TICKET’, ‘TOE’, ‘AMERICAN’, ‘FEVER’, ‘LUCID’, ‘PIPE’] Groupings: 1. 1. Shared: [’COLLECTIVE’, ‘COMMON’, ‘JOINT’, ‘MUTUAL’] 2. 2. Rid of contents: [’CLEAR’, ‘DRAIN’, ‘EMPTY’, ‘FLUSH’] 3. 3. Associated with “stub”: [’CIGARETTE’, ‘PENCIL’, ‘TICKET’, ‘TOE’] 4. 4. Dream: [ ’AMERICAN’, ‘FEVER’, ‘LUCID’, ‘PIPE’]) Example 3: Words: [’HANGAR’, ‘RUNWAY’, ‘TARMAC’, ‘TERMINAL’, ‘ACTION’, ‘CLAIM’, ‘COMPLAINT’, ‘LAWSUIT’, ‘BEANBAG’, ‘CLUB’, ‘RING’, ‘TORCH’, ‘FOXGLOVE’, ‘GUMSHOE’, ‘TURNCOAT’, ‘WINDSOCK’] Groupings: 1. 1. Parts of an airport: [’HANGAR’, ‘RUNWAY’, ‘TARMAC’, ‘TERMINAL’] 2. 2. Legal terms: [‘ACTION’, ‘CLAIM’, ‘COMPLAINT’, ‘LAWSUIT’] 3. 3. Things a juggler juggles: [’BEANBAG’, ‘CLUB’, ‘RING’, ‘TORCH’] 4. 4. Words ending in clothing: [’FOXGLOVE’, ‘GUMSHOE’, ‘TURNCOAT’, ‘WINDSOCK’] Categories share commonalities: • There are 4 categories of 4 words each • Every word will be in only 1 category • One word will never be in two categories • As the category number increases, the connections between the words and their category become more obscure. 
Category 1 is the most easy and intuitive and Category 4 is the hardest • There may be a red herrings (words that seems to belong together but actually are in separate categories) • Category 4 often contains compound words with a common prefix or suffix word • A few other common categories include word and letter patterns, pop culture clues (such as music and movie titles) and fill-in-the-blank phrases. Often categories will use a less common meaning or aspect of words to make the puzzle more challenging. Often, three related words will be provided as a red herring. If one word in a category feels like a long shot, the category is probably wrong. You will be given a new example (Example 4) with today’s list of words. Remember that the same word cannot be repeated across multiple categories, and you will ultimately need to output 4 categories with 4 distinct words each. Start by choosing just four words to form one group. I will give you feedback on whether you correctly guessed one of the groups, then you can proceed from there.

Here is the word list: [<Word list goes here>]

Seems to perform quite solidly on this.

@AardvarkSnake This is promising! A different approach than some of what we've seen before. Do you want to make an attempt? I can select the random sample or you can, and if it succeeds we can have a third party verify it. Or you can wait to try to make more mana, especially if you do the test privately and it already works 🥳

@dominic

https://connections.swellgarfo.com/nyt/444

🟨🟨🟨🟨

🟩🟪🟦🟩

🟪🟪🟦🟦

🟩🟪🟩🟩

🟪🟩🟩🟩

🟩🟪🟩🟩

🟩🟩🟩🟩

🟦🟦🟦🟦

🟪🟪🟪🟪

https://connections.swellgarfo.com/nyt/445

🟩🟦🟨🟪

🟦🟦🟪🟦

🟦🟪🟦🟪

🟩🟩🟩🟩

🟨🟨🟨🟨

🟦🟦🟪🟦

🟦🟦🟦🟪

🟦🟦🟦🟪

🟦🟪🟦🟪

🟦🟦🟪🟪

🟦🟪🟦🟪

🟦🟦🟪🟪

🟦🟦🟪🟪

🟦🟦🟦🟪

🟦🟦🟦🟪

🟦🟪🟦🟪

🟦🟪🟦🟪

🟦🟦🟪🟪

🟦🟦🟦🟪

🟦🟦🟦🟦

🟪🟪🟪🟪

Observations: Sonnet was substantially worse than 4o in my opinion in two ways:

1. It didn't track which words it had used already, resulting in many illegal guesses (not captured in the logs above). gpt-4o also does this a bit, but much less

2. It doesn't appear to recognise for itself when it has 'won' the game and just keeps on guessing (gpt-4o recognises that it has won once it gets 4 groups correct without you having to explicitly tell it)

(I used the same prompt for both)
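Both failure modes above (reusing words that are already placed, and not noticing a win) are things a small harness could track outside the model. The following is a rough sketch, not the setup anyone in this thread actually used: the `Referee` class is hypothetical, and the `"illegal"`, `"won"`, and `"lost"` labels are bookkeeping for the human running the test, since the market only allows yes/no/one away to be fed back to the LLM. It also assumes illegal guesses do not count as mistakes and that the fourth mistake ends the game (3 failed guesses forgiven).

```python
class Referee:
    """Tracks one Connections game: used words, mistakes, and win state."""

    def __init__(self, solution, max_mistakes=4):
        # Groups not yet found; each group is an unordered set of 4 words.
        self.remaining = [frozenset(group) for group in solution]
        self.mistakes = 0
        self.max_mistakes = max_mistakes  # assumption: 4th mistake loses

    def unused_words(self):
        """Words that have not been placed in a solved group yet."""
        return set().union(*self.remaining)

    def guess(self, words):
        guess = frozenset(words)
        # Illegal: not 4 distinct words, or reuses an already solved word.
        if len(guess) != 4 or not guess <= self.unused_words():
            return "illegal"
        if guess in self.remaining:
            self.remaining.remove(guess)
            return "won" if not self.remaining else "yes"
        self.mistakes += 1
        if self.mistakes >= self.max_mistakes:
            return "lost"
        # "One away": three of the four words belong to a single unsolved group.
        if any(len(guess & group) == 3 for group in self.remaining):
            return "one away"
        return "no"


# Example with the first sample puzzle quoted in the prompt earlier in the thread.
ref = Referee([
    ["DART", "HEM", "PLEAT", "SEAM"],
    ["CAN", "CURE", "DRY", "FREEZE"],
    ["BITE", "EDGE", "PUNCH", "SPICE"],
    ["CONDO", "HAW", "HERO", "LOO"],
])
print(ref.guess(["DART", "HEM", "PLEAT", "CAN"]))   # one away
print(ref.guess(["DART", "HEM", "PLEAT", "SEAM"]))  # yes
print(ref.guess(["DART", "CAN", "CURE", "DRY"]))    # illegal (DART already used)
```

Logging the `"illegal"` results separately would capture the kind of guesses that the emoji logs above leave out.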

@draaglom Interesting that it is so much worse. I wonder if the right prompt could get it to be better - it does have decent reasoning capability in other ways.

@dominic Part of what attracted me to this as a benchmark is that Connections is already trying to trick humans' ability to predict a pattern, rather than merely challenge it directly. The most conventionally likely next token being wrong or 50/50 ambiguous is kind of a core element of the puzzle (at least on difficult days)!

@Panfilo True, but it's also a puzzle all about guessing which words are kind of related to other words, something that LLMs are generally decent at. For example the Sudoku market required a super long and convoluted prompt because solving sudoku requires a lot of logical reasoning steps. Connections requires a lot less of that explicit logical reasoning, and the difficulty comes from just figuring out which words could be related (though there is some difficulty from the overlap of categories as well)