This market was made in response to this article. Quote:

"

One-hour AGI: Beating humans at problem sets/exams, composing short articles or blog posts, executing most tasks in white-collar jobs (e.g., diagnosing patients, providing legal opinions), conducting therapy, etc.

One-day AGI: Beating humans at negotiating business deals, developing new apps, running scientific experiments, reviewing scientific papers, summarizing books, etc.

One-month AGI: Beating humans at carrying out medium-term plans coherently (e.g., founding a startup), supervising large projects, becoming proficient in new fields, writing large software applications (e.g., a new operating system), making novel scientific discoveries, etc.

One-year AGI: These AIs would beat humans at basically everything. Mainly because most projects can be divided into sub-tasks that can be completed in shorter timeframes.

Although it is more formal than the definitions provided in the previous section, the (t,n)-AGI framework does not account for how many copies of the AI run simultaneously, or how much compute.

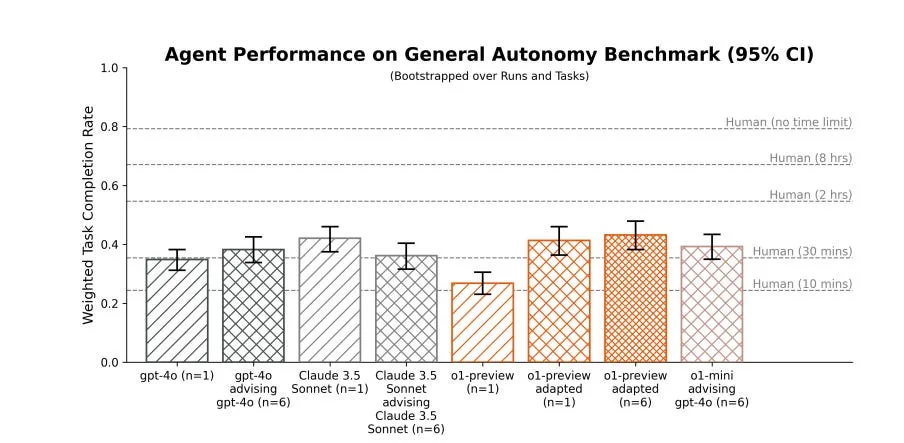

As of the third quarter of 2023, we can establish a rough equivalence “from informal initial experiments, our guess is that humans need about three minutes per problem to be overall as useful as GPT-4 when playing the role of trusted high-quality labor.”(source) So existing systems can roughly be believed to qualify as one-second AGIs, and are considered to be nearing the level of one-minute AGIs.

They might be a few years away from becoming one-hour AGIs.

"

I took objection to the article stating "might be a few years away". To me, that implies probably at least more than 2 years, perhaps more like 10 years. I think it will be less than 2 years. So here I am, putting my money where my mouth is.

Edit:

Based on questions asked, here are some more details:

This is AI (plus any tools the human is granted, e.g. web search, but excluding other AI) versus human (web search, code IDE, and calculator, but no explicit AI).

Secret knowledge that the human has which the AI can't look up on the web wouldn't count. This is about ability rather than knowledge.

The human for the comparison should be an above-average, competent professional, but not a remarkable genius.

Update 2025-03-01 (PST) (AI summary of creator comment): Resolution Criteria:

The comparison is between:

AI (including tools such as web search, excluding other AI)

Human (equipped with web search, code IDE, and calculator, but no explicit AI)

The tasks considered are those that a competent professional can perform on a computer within one hour.

The market resolves YES if the state-of-the-art (SotA) AI can perform any of these tasks with high reliability, where the failure rate is comparable to that of a competent human.
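To make the failure-rate comparison concrete, here is a minimal sketch of how one might check it. This is not an official resolution procedure: the `TaskResult` record, the `tolerance` of 0.05, the reading that the criterion must hold for every task in the evaluated set, and the example numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Outcome counts for a single one-hour computer task (hypothetical record)."""
    name: str
    ai_failures: int
    ai_trials: int
    human_failures: int
    human_trials: int


def failure_rate(failures: int, trials: int) -> float:
    """Fraction of attempts that failed."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    return failures / trials


def comparable(task: TaskResult, tolerance: float = 0.05) -> bool:
    """True if the AI fails at most `tolerance` more often than the human.

    The tolerance is an assumed threshold; the market does not specify one.
    """
    ai = failure_rate(task.ai_failures, task.ai_trials)
    human = failure_rate(task.human_failures, task.human_trials)
    return ai <= human + tolerance


def would_resolve_yes(tasks: list[TaskResult], tolerance: float = 0.05) -> bool:
    """Assumed reading: the AI must be comparable on every evaluated task."""
    return len(tasks) > 0 and all(comparable(t, tolerance) for t in tasks)


# Made-up numbers, purely illustrative.
tasks = [
    TaskResult("draft a short report", ai_failures=1, ai_trials=10,
               human_failures=1, human_trials=10),
    TaskResult("fix a small bug", ai_failures=9, ai_trials=10,
               human_failures=1, human_trials=10),
]
print(would_resolve_yes(tasks))
```

With these invented numbers the second task fails the check, so the sketch reports that the criterion is not met. A real evaluation would also need enough trials per task for the rates to be meaningful.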

@ikoukas Yeah, though not to like, expert speed-runner level. Just like a typical competent person who was previously unfamiliar with that specific game but familiar with computer games in general.

@NathanHelmBurger Whatever the benchmark, it seems unlikely to be representative. If AI could truly do 30-minute tasks comparably to typical humans, you'd see massive adoption in areas like consumer support. Yet we don't see that.

@AIBear I work as a developer, and probably over half of my tasks are doable in 30 minutes assuming knowledge of the codebase. I've tried using multiple SOTA LLMs to automate my tasks, yet none of them were even close to being consistent.

From what I've seen with experiments in other areas, when actually deploying AI systems they only work well with human supervision.

@ProjectVictory Yeah, that seems fair. I do think that 30 min tasks can occasionally get accomplished... But any little thing goes wrong (including an unlucky generation step) and it breaks. I'd estimate like 10% chance of working? That's pretty crap reliability.

@AIBear yeah, the reliability is currently a big obstacle. Also though, I do expect indicators like corporate adoption may lag behind by quite a bit. With progress moving so rapidly, a half-year lag or more on a trailing indicator makes that indicator not so helpful.

@EliezerYudkowsky good question. I was thinking above-average, competent professional, but not a remarkable genius. Does that clarify?

I am personally interested in gaming. One guy in Dead by Daylight won 1000 matches in a row. That game involves a lot of fifty-fifties, where you just have to guess what the opponent will do based on his personality earlier in the match.

Currently people are better at games than AI if the number of learning iterations is the benchmark. A human needs 2-10 matches to understand chess, while neural networks need to simulate millions of matches to grasp the basic concepts.

Opening a business and similar tasks are not interesting. Wisdom about those things is definitely in training data. But facing A NEW challenge, a new game, being able to learn on the go is interesting, is closer to human brains.

I have no doubt AGI will appear soon, but I look forward to the next step in the research: a system with no memory which is dropped into the real world and is able to learn instead of being taught.

@KongoLandwalker I absolutely agree that that is something currently lacking which will be needed for true AGI.

This market specifically is trying to aim at close-to-but-not-quite-AGI with a clear operationalization. I can totally imagine an advanced LLM at the end of 2026 having memorized so many facts and simple heuristics that it can competently do 1-hour computer tasks with high reliability, and yet would still fail to reason about and quickly learn truly novel out-of-distribution tasks.

I'm not saying I do think AI will plateau at that point, just that this market is about asking if AI can get at least that far by end of 2026.

Thanks for sharing! Great market, and thanks for referring to the source! Btw, in order to win, does AI have to be better than humans without that AI, or also better than humans + that AI (making humans basically not only inferior but totally useless)? And how can you measure whether AI is able to do ANY task (I can expect that at least some tasks will require exclusive knowledge that only some specific people have, say about a corporate system or about a very niche topic)?

@SimoneRomeo This is AI (plus any tools the human is granted, including web search but excluding other AI) versus human (web search, code IDE, and calculator, but no explicit AI).

Secret knowledge that the human has which the AI can't look up on the web wouldn't count. This is about ability rather than knowledge.

The human for the comparison should be an above-average, competent professional, but not a remarkable genius.

@NathanHelmBurger I get the idea, but drawing the line between ability and knowledge is very difficult. I guess you'd count writing as an ability, but you still have to study and practice. The same goes for being a lawyer or most other tasks. As a comparison, if you took a totally smart human hunter-gatherer and you gave them a computer, Devin could already be more competent than them right now.

@SimoneRomeo Yeah, there is definitely blurriness with the line. LLMs today highlight this by being so much better at factual recall than at practical problem solving.

As I said in my response to Eliezer elsewhere in the comments, the target for this market is "above-average, competent professional, but not a remarkable genius."

For any given job you might conceivably pay a competent professional in modern society to do on a computer for an hour, the market resolves YES if there is a SotA that can do any such task with high reliability. (Failure rate should be similar to the rate a competent human would fail at.)

A lot of text-based tasks are getting towards an hour as of the start of 2025, but with terrible reliability. Claude computer use shows that full computer use is feasible, but has even worse reliability. Progress is being made, but there is still a long way to go.

@NathanHelmBurger my concern is that companies (particularly in creative industries) have specific ways of doing things that you only learn by being there for years. Without this kind of cultural knowledge, new hires tend to rework projects a lot.

We could replace the word "human" with "freelancer", which I think is more applicable. A freelancer is a supposedly skilled person who doesn't know your specific company culture, and so can be better compared to an AI model.