@CateHall writes:

Will I agree that she was right? I'll resolve YES if I think this has happened before the end of 2025, NO if it obviously hasn't, and I'm open to partial resolutions.

Warning: this market may be inherently kind of fuzzy/vibes-based. If anyone has ideas for more objective criteria that would answer the core question without getting into technicalities, please speak up!

Sources I will likely consult for a resolution, if they're open to advising:

My own information diet (friends, substack/blogs, LW, X/twitter)

Cate Hall herself

Manifold moderators

Some kind of LLM judge

https://www.youtube.com/watch?v=5CKuiuc5cJM Seems like a good sign

Just noting that, as of right this second, I think "If Anyone Builds It" has made less of a splash than expected. My guess is that AI 2027 and Situational Awareness will end up having bigger impacts on discourse.

It's still early for IABIED, though!

@jessald There's a difference though between people who are anti-AI in the sense that they don't want to use it, vs anti-AI in the sense of wanting regulation to prevent it from killing everyone.

I think that's the kind of preference cascade this market is trying to measure.

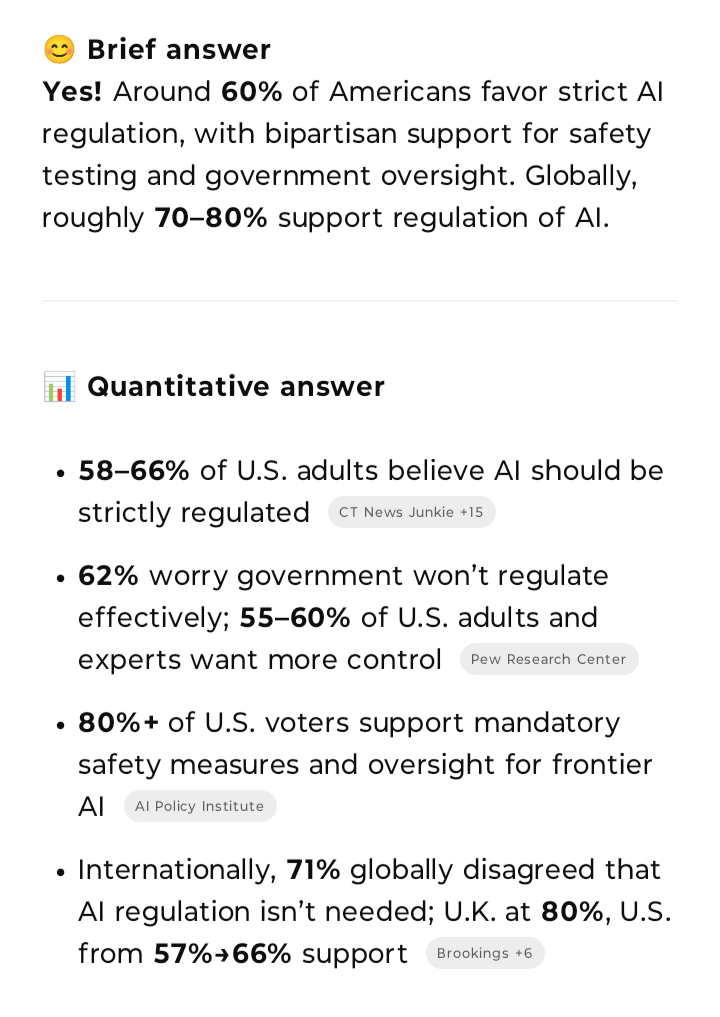

@TimothyJohnson5c16 Hmm, quick check with 4o:

My read is people do want regulation, are concerned about xrisk, and the reason we don't see serious regulation is arms race dynamics.

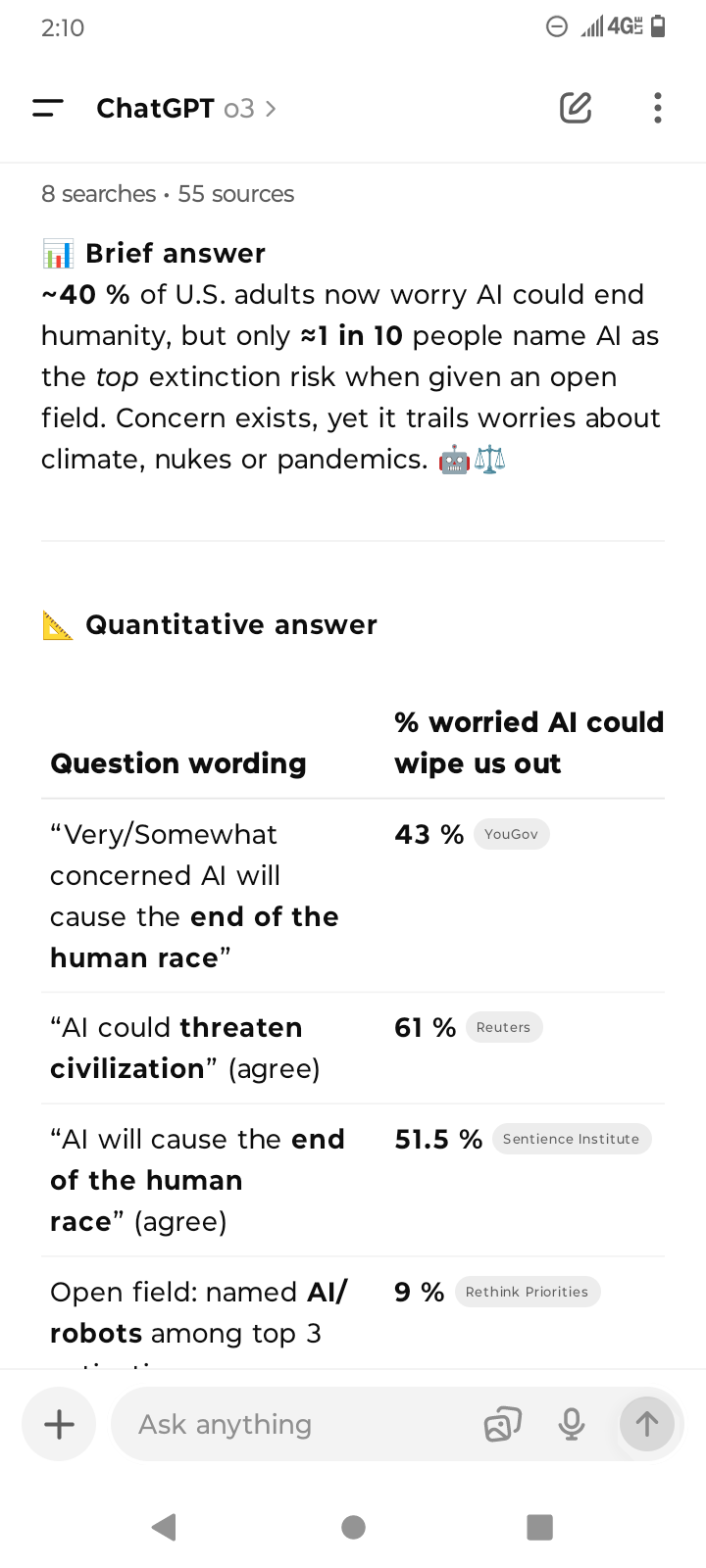

@jessald does 4o know how many people are concerned about xrisk from AI (excluding, if possible, global-warming-related concerns)? My guess is it's much lower. In surveys like this, people basically think everything should be strictly regulated, I reckon (though I'm not very informed about survey modelling and stuff, tbh), but xrisk from AI is not their primary concern.

@Bayesian https://chatgpt.com/share/68814fc4-fad4-8007-aa64-18f31540e372

A bit fuzzier, but I think people pretty much get it.

Yudkowsky's book is at least a plausible initiator for this. I'm starting to agree with other commenters that a lot of the prerequisites for this are in place (including large majorities with anti-AI sentiment, though at low salience). The priors are still very strongly against this happening, though.

I think this market is a good operationalization of “politicians talk more about xrisk publicly as a result of Yudkowsky’s book”: https://manifold.markets/AdamK/will-an-elected-us-federal-official?r=QWRhbUs

@AshDorsey Very few people that I know in real life would even know what "existential risk" means. It will take a major AI disaster for them to start worrying about it.

@TimothyJohnson5c16 Musk and Bernie are both communicating about it pretty strongly. I feel like there's significant concern about AI company CEOs publicly stating that what they're doing might kill everyone, and this will probably hit the mainstream ~soon-ish. I believe that will mean this market resolves YES, whether or not people "truly" believe in it (many people see it as propaganda, which isn't that unreasonable).

At this point, stuff is actively circulating around DC, people are speaking up on both sides of the aisle, and people are having significant negative reactions to value being captured by large companies. I don't see how this won't result in people going after the easiest criticism: AI has, at the very least, been admitted to be dangerous. Why would people shy away from that critique? I could be totally wrong, but I would be very surprised if this doesn't become a huge deal.

Anecdotally, someone I talk to who is very bearish on AI is slowly warming up to the idea of AI actually being a threat, although they're still far from believing AI is going to kill us all.

On the other hand, AI 2027 seems to indicate this will happen in late 2026, so perhaps I am really wrong, or perhaps politicians/influencers (in the broad sense of the term) are being smarter/more reactive than expected.

@AshDorsey I like @AdamK's Manifold market here: "In January 2026, how publicly salient will AI deepfakes/media be, vs AI labor impact, vs AI catastrophic risks?"

I expect that the ordering of concerns there will continue to be Labor > Deepfakes > Catastrophic until at least the end of the year.

To that, I would add that there's a significant contingent of non-technical people who haven't figured out how to get AI to be reliably useful (mainly due to hallucinations) and have concluded that it's probably a scam.

@TimothyJohnson5c16 I agree that Labor > Catastrophic makes sense, but catastrophic risks are so easy to attack and are (near) universally seen as bad, whereas labor replacement has arguments on both sides and many people (especially business leaders) are looking forward to it. I guess my main point here is that this market doesn't require xrisk to rank first in the other market in order to resolve YES.

@AshDorsey I guess it's up to Austin to decide, but I figured it's not "massive" unless catastrophic risks are the highest priority for most people.

@AhronMaline I disagree strongly, not because it wouldn't be bad, but because it's a different issue, treated separately in discourse and requiring different measures. Also, I think "xrisk" is a pretty clear label.

It would be nice if you could give some examples of things which would solidly count, just barely count, and just barely not count.

Some things which I think should be pretty far from counting:

OpenAI and xAI talk much more about x-risk and Bannon talks much more about this too. Employees at AI companies seem much more sold. (But no other substantial changes.)

Talking about x-risk from AI becomes substantially more common on X/twitter, but there aren't other substantial changes.

Things which seem more borderline and I'm unsure about (probably shouldn't count?):

There is a big left-only preference cascade toward caring a lot more about x-risk and generally being more anti-AI. It's one of the main things Sanders and AOC talk about for some period. It also becomes very popular on Bluesky. But it doesn't spread beyond this.

David Sacks and the AI-related parts of the Trump admin start saying things about x-risk that are much more sympathetic than what they say currently, and it becomes much more accepted on right-wing tech Twitter. AI companies piggyback on this to say much more about x-risk. There is substantially more discussion from the populist right of AI being bad and an x-risk. The left doesn't really change much. (Wouldn't qualify as massive?)

A clearly massive preference cascade toward caring much more about x-risk but which mostly doesn't manifest as being anti-AI.

huh, I'm a bit surprised that so much of the Manifold community think this is unlikely; anyone want to make a quick case for why?

I think I'm 50%+ on this, based on things like: the recent congressional hearings, the MIRI book, the fact that my parents are now like "oh I'm glad you're working on safety", people saying their Uber drivers have takes on xrisk...

@Austin Nothing ever happens, AI is low salience, I expect the MIRI messaging to only work somewhat narrowly (but lower confidence about this last one).

@Austin Timeline is way too soon for AI-specific x-risk to become a high priority issue even among informed elites. Prob should be under 5%.

@Austin That said, your resolution sources seem to be centered on people who are already the likeliest in the entire world to think AI X-risk is a big deal, so... maybe I'm being foolish here? Or maybe it's less possible for them to move significantly further on AI X-risk, since they're already informed?

@Austin Most of the mainstream anti-AI sources I've seen just say that it's not even that smart, which doesn't mesh very well with concerns about x-risk.